Five Stages Towards Co-Existence: Humans and AI in Harmony

As a child, I was fascinated by technology. When I was five, I was a lazy helper in my grandpa's restaurant, and my mom worried that I would never find a job when I grew up. I told her I would become an engineer and invent lots of machines to handle jobs and chores for me. (A few days later, I even drafted a design diagram for an automated noodle production line, covering everything from making the noodles to cooking them.)

My friends jokingly compare me to Reagan Ridley, the brilliant but socially awkward scientist from the Netflix series "Inside Job". When it comes to building automation tools, I am very much like her; I especially related to the scene where she builds automated robotic arms to tie her hair and fetch coffee.

But at the same time, I am different from her in two ways:

External environment: I grew up in a diverse, interdisciplinary learning environment, which allows me to communicate fluently across science, engineering, design, and business. This unique blend makes me a natural cross-functional collaborator.

Internal characteristics: As an HSP (Highly Sensitive Person), and through my experiences as a high school math tutor for six years and as a youth mentor in a global competition program, I have cultivated an awareness of and patience for people's feelings, which lets me understand the reasons behind problems from a more nuanced perspective.

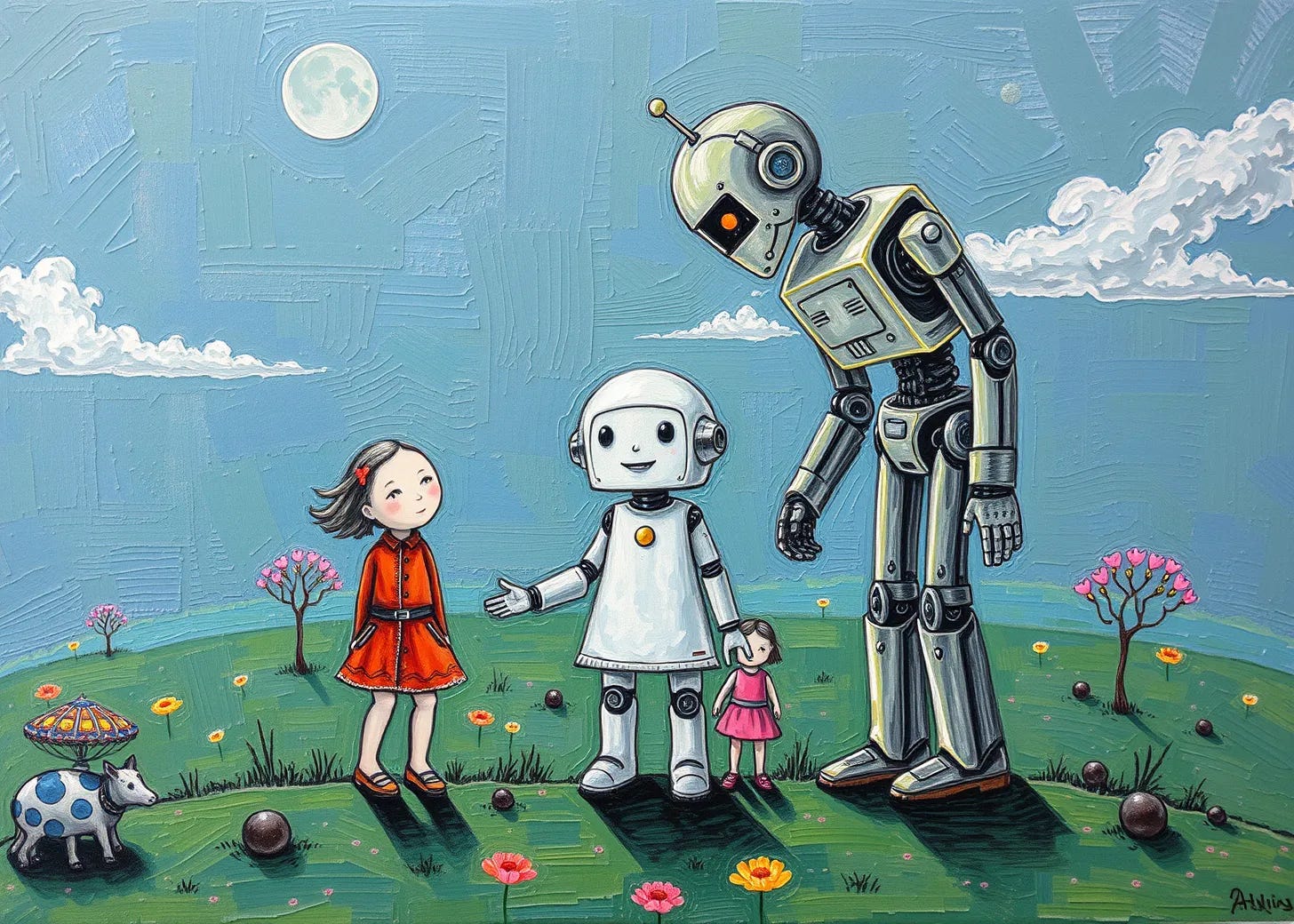

My vision is that humans and AI will someday achieve harmonious coexistence, where human sensibility and AI rationality complement each other to create a better world together.

Looking back on my experiences, from interacting with ELIZA-based chatbots at the age of 11, to learning about Machine Learning as an exchange student in 2016, to founding a Computer Vision startup in 2018, I have come to believe that humans and AI can reach harmonious coexistence through five stages.

Stage 1: Planting the Seeds of Human Desires

I remember watching the series "Lucifer", in which the main character, Lucifer Morningstar, asks in practically every episode, "What is it that you truly desire?" At first, I thought the word "desire" was inherently negative, because it often appears in the context of temptation or sin. But as I watched more episodes, I found that the show is really a humorous metaphor for the journey of self-discovery and for understanding deeper emotional truths and struggles.

The best thing to do is always to follow your greatest desires.

This line from one of his monologues emphasizes the importance of pursuing what one truly wants in life, and it ties the show's exploration of morality to personal choice.

Rather than focusing on the topic of Alignment, I would like to focus on human needs here. When I wrote the article "Measuring AI Success by the Value It Brings to Users", I included a pyramid of users' needs. Every time I build a product, even a small feature, I always ask myself: How does this product (or feature) fulfill the needs of users? What level of needs does it satisfy?

That pyramid is similar to Maslow's Hierarchy of Needs: higher-level needs become prominent only after lower-level needs are satisfied. However, different people are at different stages, so their expectations for AI differ too. This reminds me of the viral tweet from @AuthorJMac, who wanted AI to do her laundry and dishes so that she could do art and writing, not the other way around. There's no single correct answer, so I don't believe there is a right or wrong direction for AI development; the stage of needs simply varies from person to person.

Physical Needs & Safety Needs

In my view, although physical and safety needs are the most basic human needs, they are also the most complicated ones, because they are closely intertwined with the establishment of laws, the functioning of government, cultural norms, and the operation of the entire social system. One reason some people are reluctant to accept AI is that they are afraid of losing their jobs, which is a direct threat to their physical and safety needs.

Food: Access to sufficient and nutritious food

Water: Clean drinking water

Shelter: A safe and secure place to live that protects individuals from environmental hazards

Physical Safety: Protection from violence, crime, and accidents

Health Security: Access to healthcare and protection from illness

Financial Security: Stable income and resources to meet basic financial needs

Belongingness, Love and Esteem Needs

Unlike physical and safety needs, which are easy to quantify but take time to fulfill, the needs of belongingness, love, and esteem are more subjective and difficult to measure.

When I was a founder pitching my product to an investor, he told me it's important to know whether you are building a medicine or a vitamin. I then realized that if your product's direct impact on users is hard to measure, or its key results are hard to define, the path to the end game (the goal) is vague.

Interestingly, when you dive deeper into these two levels, you'll find that the definition of each need varies from person to person. In my view, foundation models serve as infrastructure for human society and can be applied to the physical and safety layers. However, for belongingness, love, and esteem needs, these models require fine-tuning to meet individual requirements. I believe open-source communities like Hugging Face will play a crucial role in this stage by enabling the customization of AI models for these highly personalized needs.

Family

Friendship

Community

Recognition

Self-esteem

Achievement

Self-Actualization Needs

The core philosophy of YC is "Make something people want." And the perspective of Jensen Huang, the CEO of Nvidia, is that "AI has made everyone a programmer. You just have to say something to the computer." Both quotes emphasize a user-centric perspective.

It's hard to define self-actualization precisely. However, I think its essence is finding meaning and purpose in life. With AI in the future, I believe what Picasso said will come true:

Everything you can imagine is real.

Stage 2: Nurturing Growth with AI Assistance

When I was a high school student, I was granted a tuition waiver because of my outstanding "overall" performance. "Overall" performance meant being good not only at Intelligence (aka test scores), but also at Morality, Athletics, Community, and Aesthetics. My classmate and I discussed why the tuition waiver excluded people who were good at Intelligence "only". I found myself asking a similar question when building the bench-library, a collection of benchmark datasets covering different capabilities: most benchmarks focus on Intelligence, while other capabilities have few benchmarks. This makes sense. Measuring Athletics, for example, would require high-precision physical sensors to capture performance across diverse sports, and there's no standard for measuring Aesthetics because it's highly subjective and depends on individual preferences and cultural differences.

Returning to how AI assistance can help humans fulfill their needs and live better lives, I see it from two perspectives. From the builder's perspective, there are many mechanisms for doing so: foundation models, prompt engineering, RAG, fine-tuning, and building agents. I think the key is to clearly understand your target users and their needs, then find the most efficient way to deliver value. I know that's a general and rough suggestion, but I have a relevant story to share.

A few years ago, when I demonstrated an object detection model to enterprise customers, I emphasized its high accuracy, much like showing off a high SAT score as proof of Intelligence. The truth, however, is that accuracy isn't the only factor users care about in real-world scenarios; sometimes they can't even tell the difference between 72% and 84% accuracy. That said, it's still crucial to meet the minimum requirements, since metric standards vary across industries. After that, we retrained our model to better align with our customers' specific needs, focusing not only on accuracy but also on other relevant metrics, and we refined our approach starting from the data collection and curation phase to deliver a more tailored solution.
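To make the point about accuracy concrete, here is a minimal sketch assuming a simplified binary setting (each item either contains a target object or not) and made-up counts for two hypothetical models. The names `ConfusionCounts`, `summarize`, and `meets_requirements` are illustrative only; they are not from any library or from the system described above. It shows how a model can have the higher accuracy and still miss most of what a customer cares about.

```python
from dataclasses import dataclass


@dataclass
class ConfusionCounts:
    """Binary confusion-matrix counts for one model on one evaluation set (hypothetical)."""
    tp: int  # target objects correctly flagged
    fp: int  # items wrongly flagged
    fn: int  # target objects missed
    tn: int  # items correctly left unflagged


def summarize(c: ConfusionCounts) -> dict[str, float]:
    """Compute accuracy alongside precision, recall, and F1."""
    total = c.tp + c.fp + c.fn + c.tn
    flagged = c.tp + c.fp
    actual = c.tp + c.fn
    precision = c.tp / flagged if flagged else 0.0
    recall = c.tp / actual if actual else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": (c.tp + c.tn) / total,
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


def meets_requirements(m: dict[str, float], min_accuracy: float, min_recall: float) -> bool:
    """Assumed acceptance rule: clear a minimum accuracy bar AND the customer's key metric."""
    return m["accuracy"] >= min_accuracy and m["recall"] >= min_recall


# Made-up numbers: 100 items, 10 of which contain the target object.
model_a = summarize(ConfusionCounts(tp=2, fp=0, fn=8, tn=90))   # rarely flags anything
model_b = summarize(ConfusionCounts(tp=9, fp=15, fn=1, tn=75))  # flags more aggressively

for name, m in [("A", model_a), ("B", model_b)]:
    rounded = {k: round(v, 2) for k, v in m.items()}
    print(name, rounded, meets_requirements(m, min_accuracy=0.8, min_recall=0.8))
```

Here model A wins on accuracy but catches only 2 of the 10 targets, while model B clears both assumed thresholds. Which metric matters most, and where the minimum bar sits, is exactly what has to come from the customer.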

From the user's perspective, as an AI enthusiast, I always try the SOTA models and keep an eye on the progress of AI research. When I see people "challenge" LLMs with trivial tasks, it feels like a waste of both the models' capabilities and the computing resources behind them. I know it's not the users' fault if they're unsure how to make the most of these tools. As someone who has been on both sides of the fence, creating AI and using it, I really hope more people discover the incredible ways AI can make a positive difference in their day-to-day lives. There's so much potential waiting to be unlocked!

Stage 3: Reaping the Fruits of AI-Driven Empowerment

I remember the shock and excitement when I first saw Tesla Autopilot in 2017, running on an engineering prototype that had not been released to the public. I yelled out loud when my friend, who was driving, let go of the wheel and the car drove itself on the highway. Every time I use a copilot or an AI agent, this memory comes back to me. Now, in 2024, Waymo's Level 4 vehicles are on the roads of San Francisco.

When it comes to levels of autonomy, there's an important graphic worth highlighting. I prefer the OpenAI Levels because they classify systems by how AI interacts with humans and society, rather than merely comparing AI capabilities to human abilities.

On September 12, 2024, OpenAI released its new model o1-preview, which brings AI systems to Level 2: reasoners that can solve human-level problems. From my observation, the obstacle to reaching Level 3 is not a lack of mechanisms for building agents. Cursor redefined how developers use the Tab key, changing their behavior and creating a whole new experience. Replit Agent goes further by taking actions on users' behalf: it writes and runs code, then deploys applications faster than ever. Some of us are already experiencing the same "let go of hands" moment I had in 2017. However, the path to Level 3, or Stage 3 of my vision, is not mainly blocked by technology; it's about how to "let go of hands" more safely, both for others and for ourselves.

This stage will take time, as we search for a balance between safety, alignment, and empowering AI to act on behalf of humans. We can see this in the recent California AI bill, SB 1047 (the Safe and Secure Innovation for Frontier Artificial Intelligence Models Act), which has sparked much controversy and many challenges. What Meta's chief AI scientist, Yann LeCun, posted about SB 1047 on X reminds me of something Yuval Noah Harari said:

I think AI is nowhere near its full potential. But humans also, we are nowhere near our full potential. If for every dollar and minute that we invest in developing artificial intelligence, we also invest in exploring and developing our own minds, we will be okay. But if we put all our bets on the technology, on the AIs, and neglect to develop ourselves, this is very bad news for humanity.

Stage 4: The Blossoming of Trust and Singularity

Three films have reshaped my understanding of AI, but the most important one is definitely Oppenheimer.

You drop the bombs, and it falls on the just and the unjust. I don't wish the culmination of three centuries of physics to be a weapon of mass destruction. -- Isidor Isaac Rabi, Oppenheimer (2023)

In this conversation, Izzy gently wipes away his tears as he says this. The scene moves me deeply because I did psychophysics research in graduate school, and I can feel the struggle of a scientist who has dedicated his whole life to science yet has to wrestle with joining the Manhattan Project, knowing it might lead to a disastrous outcome for the world.

Most predictions about the AI singularity focus either on AI surpassing human intelligence or on humans integrating AI into their brains. I am more inclined to think that the singularity is about trust.

Just because we’re building it, doesn’t mean we get to decide how it’s used. History will judge us. -- Szilard, Oppenheimer (2023)

During a panel discussion on AI ethics in 2023, particularly about bias in AI, I mentioned cognitive bias, saying, "You can't expect AI to solve problems that human society hasn't yet addressed. AI is a reflection of human society." Our history is filled with stories of mistrust, from real wars to fiction, and this lack of trust leads to conflicts and contradictions.

When discussing trust, we must address concerns surrounding AI safety. These can be categorized into two main areas: how people use AI and the potential for AI to develop consciousness and make harmful decisions.

Human Use of AI

When I first read Harry Potter, I was amazed by the magic in the story. But when I reached the chapters about dark magic, especially the three Unforgivable Curses, I wondered who would use these spells and why, and how the Ministry of Magic prevents witches and wizards from using them.

Dark magic shows that magic is a double-edged sword: magic itself is neutral, and how it is used determines its moral nature. I understand why some people support regulating AI: no one wants to be the first victim, like those who died under Tom Riddle's spells. However, this is not a reason to stop using our wands to explore unknown magic and create amazing things. We need a framework to guide us in using magic wisely.

They won’t fear it until they understand it, and they won’t understand it until they’ve used it. -- Oppenheimer, Oppenheimer (2023)

The tension between curiosity and censorship is a recurring theme in the development of frontier technology. Within the Hogwarts Library (yes, we are still talking about the book), there is a Restricted Section that contains dangerous books, accessible only to students with special permission. The Forbidden Forest, an area near Hogwarts, is home to many magical beings. Both of these serve as metaphors for the human desire to explore unknown powers.

From my perspective, our fear is rooted not in using AI itself, but in our distrust of how people will use it, which leads us to assume the consequences could be disastrous. If we could build an open-source, isolated simulation environment, in the spirit of a Docker container, people could explore any scenario with AI, including harmful ones. This would give us a complete picture of the potential dangers and help us better understand ourselves and our fears, which are our real enemies.

AI Consciousness and Decision Making

I came across memes about people who say "Thank you!" or "Please!" at the end of their prompts when asking LLMs to help them with a task. They joked that if AI becomes conscious one day, it will remember how kind and polite humans were and won't harm us. I laughed at first, but then I realized how afraid people are of AI gaining consciousness.

(image from memscore)

In my observation, the fear stems from humans having long considered themselves the dominant species on Earth, capable of conquering and dominating other species and environments. We assume that a conscious AI would threaten us because it would no longer be under human control. So, are we afraid of AI gaining consciousness, or are we afraid of losing control? Letting go of control doesn't mean giving up our subjectivity, which is humanity's precious ability: the wisdom that comes from active thinking. I believe we will build a physical sandbox to explore human-AI interaction, for example to determine AI's role in society as a mediator that helps process large amounts of data and handles routine work.

Several AI computing centers are under construction, but is it possible to allocate sufficient resources to build a physical sandbox for AI safety in a commercially driven environment? We all know that convincing stakeholders to invest in such high-cost projects, especially when the direct financial benefits are not immediately visible, is extremely challenging. But consider the externalities, both positive and negative, which will affect all of human society. All of humanity, including future generations, and the ecological environment (which simply cannot express its opinions in words) are stakeholders.

It turns out that we are not building a system to supervise AI or how people use AI; we are designing a system for a whole new society. I know change is hard. Changing the world requires new stories. The culture we grow up in and the roles we play within it shape the stories we tell ourselves, and the definitions in these stories also limit our true experiences. When enough people believe in a new story, our culture will shift direction and bring about real change.

Stage 5: Cultivating a Garden of Harmonious Coexistence

When I was talking with a friend who works at Google about how different company cultures deal with problems, I told him that I am a person who always has a best-case scenario in mind. He said: "That's why you are an entrepreneur." That doesn't mean I lack a risk-management mindset. I just know that every form of thinking is creative, but no thought is more powerful than the original thought. The process of creation begins with a thought, a concept, a visualization. All that we see around us was once a thought in someone's mind.

I still remember how excited and joyful I was when I was doing the 3D modeling assignment in the 3D reconstruction class. I was amazed that I could create a whole new world by myself, even though I knew it wasn't real yet. We have all the tools needed to make choices. The world is what it is now due to the choices we've made. Our genuine thoughts are revealed through our daily decisions.

My visualization of the future with AI is that there will be no shortage of goods and services; it will be an age of abundance where everyone can have whatever they want. People will be able to learn anything, anytime, anywhere, in their native language and in the way they like. We will have millions of AI bureaucrats everywhere: in banks, in governments, in universities. Further, we will have universal basic income for everyone. I know some people might think this is utopian. But looking back at Stage 1 of this article, human desires are the beginning of all creation; they are the original thought. All needs should be treated equally, but not all needs are equal. It's a matter of proportion and balance. All we have to do is find a way to achieve harmony. So, what is your visualization of the future with AI? Share your thoughts on Harmony Land.

Humans were tool builders, and we build tools that can dramatically amplify our human abilities. All inventions of humans, as history unfolds if we look back, are the most awesome tools that we ever invented. -- Steve Jobs